Our Brains are Like Software, Not Hardware

In 1946, John von Neumann and his wife, Klara, successfully upgraded ENIAC to enable stored-program computing. This project kicked off the modern computer age, making ENIAC the first fully electronic general-purpose computer. The von Neumann architecture, which includes a central processing unit, arithmetic logic unit, memory, input and output mechanisms, external storage, and control units that bus information around, has remained the dominant computer architecture to this day. It’s how the computer I’m typing this article on is architected and, chances are, the one you’re reading it on, too.

What doesn’t get told as often is why ENIAC was upgraded. John von Neumann had secured a contract with the U.S. Navy to attempt to use computers to predict the weather. The mathematical models had been developed for weather prediction already, but performing the calculations required seemed out of reach. One researcher theorized that he would need 64,000 people just to do the math. An individual using a desk calculator would need eight years. If humans could use computers to dynamically perform these calculations, we could effectively see into the future — and by 1955, we could. Computers could accurately provide a 24-hour weather forecast in just ten minutes. The world has never been the same.

After the Navy project concluded, von Neumann set to work on his next project — using mathematical modeling to understand human cognition. It seemed just as dynamic, enigmatic, and profound as the weather. While von Neumann died in 1957, his unfinished paper, “The Computer and the Brain,” has remained an influential work. In it, von Neumann hypothesized that components of a computer might be likened to human organs, with both systems relying on electrical impulses to ferry, process, and express information.

The problem is that von Neumann’s hypothesis turned out to be wrong. Neuroscience is showing us that our brains are structured much differently than von Neumann imagined. Our brains don’t have singular components, store our memories like files, or bus information around on predetermined paths. Thinking of the brain in the same way as your computer hardware is problematic. And while some critics have suggested we abandon the metaphor altogether, I’m inclined to invoke George Box’s sentiment about statistical models here: “All models are wrong, but some are useful.” Or, to apply this to words instead of numbers, all metaphors are wrong, but some are useful.

Instead of thinking of the brain as hardware, it might be more useful to think of it as software — particularly as object-oriented programming.

Object-oriented programming (OOP) is the backbone of many modern programming languages, such as C#, Java, Python, PHP, and Ruby, just to name a few. OOP is particularly suited to this metaphor because its design was inspired by biology. Its inventor, Alan Kay, reflected on how his dual degree helped him see the underlying pattern from which OOP is built.

“My math major had centered on abstract algebras with their few operations generally applying to many structures. My biology major had focused on both cell metabolism and larger scale morphogenesis with its notions of simple mechanisms controlling complex processes and one kind of building block able to differentiate into all needed building blocks.”

In the late 1960s, Kay was taking a critical look at the programming language LISP when he observed some quirks. “The pure language was supposed to be based on functions, but its most important components—such as lambda expressions, quotes, and [conditionals]—were not functions at all, and instead were called special forms.” This got Kay thinking: what if all of these components were treated like cells in the human body? Cells have a boundary, the membrane, so they can “act as components by presenting a uniform interface to the world.” Kay incorporated this design into his programming language, Smalltalk, and named the “cells” of the system “objects.” As Kay describes:

“Instead of dividing ‘computer stuff’ into things each less strong than the whole—such as data structures, procedures, and functions that are the usual paraphernalia of programming languages—each Smalltalk object is a recursion of the entire possibilities of the computer. Thus its semantics are a bit like having thousands and thousands of computers all hooked together by a very fast network. Questions of concrete representation can thus be postponed almost indefinitely because we are mainly concerned that the computers behave appropriately, and are interested in particular strategies only if the results are off or come back too slowly… Smalltalk’s contribution is a new design paradigm — which I called object-oriented.”

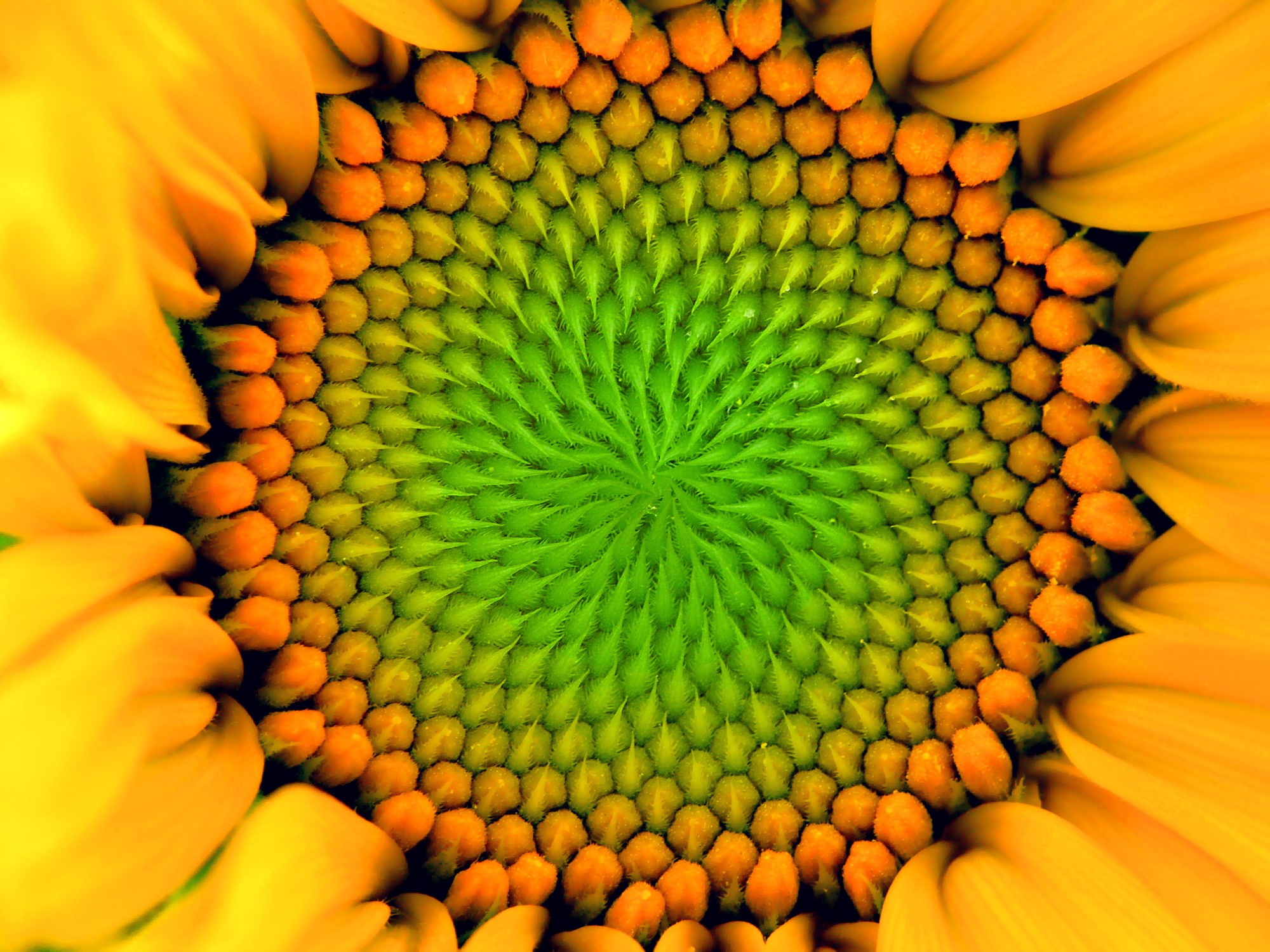
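
To make Kay's cell analogy a little more concrete, here is a minimal Python sketch. The class names (`Cell`, `Neuron`), the `receive` method, and the message names are all illustrative assumptions of mine, not Smalltalk's actual API. The point is only that each object keeps its state behind a "membrane" and is reached through one uniform interface.

```python
class Cell:
    """An object in the spirit of Kay's cells: hidden state, one way in."""

    def __init__(self, name: str):
        self._name = name
        self._energy = 0  # internal state, kept behind the "membrane"

    def receive(self, message: str) -> str:
        # Uniform interface: every cell accepts messages the same way,
        # and decides for itself how (or whether) to handle them.
        handler = getattr(self, f"_on_{message}", None)
        if handler is None:
            return f"{self._name} does not understand {message!r}"
        return handler()

    def _on_feed(self) -> str:
        self._energy += 1
        return f"{self._name} energy is now {self._energy}"


class Neuron(Cell):
    """A specialized cell that understands one extra message."""

    def _on_fire(self) -> str:
        return f"{self._name} fired"


# The sender does not need to know what kind of cell it is talking to.
cells = [Cell("skin"), Neuron("n1")]
replies = [cell.receive("fire") for cell in cells]
print(replies)
```

The caller never inspects how a cell is built. It sends the same message to each one and lets the receiver work out its own response, which is the "uniform interface to the world" idea in miniature.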

When we’re applying the metaphor of a computer to human cognition, the notion of recursion, or self-similar repetition, makes a lot of sense. Recursion occurs all the time in nature. If you’ve ever seen the branches of a tree, the center of a flower, or the crystals of a snowflake, you’ve seen the power of recursion at play. Simple rules, repeated in a self-similar manner, can create extraordinarily complex structures. Even the neurons in our brains share this branching, self-similar architecture.
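
For readers who think in code, here is a small, hedged sketch of "simple rules, repeated in a self-similar manner." The function name `count_twigs` and the fixed branching rule are my own illustrative assumptions. Real trees and neurons are far messier.

```python
def count_twigs(depth: int, branches_per_split: int = 2) -> int:
    """Count the tips of a tree in which every branch splits the same way."""
    if depth == 0:
        return 1  # a tip: nothing left to split
    # The same rule is applied again to each child branch.
    return sum(
        count_twigs(depth - 1, branches_per_split)
        for _ in range(branches_per_split)
    )


print(count_twigs(10))     # ten rounds of a two-way split
print(count_twigs(3, 3))   # three rounds of a three-way split
```

A one-line rule ("split, then do the same thing again") is enough to produce 1,024 tips after ten rounds, which hints at how a little repetition can add up to a lot of structure.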

It would make sense that our brains would conform to the architecture that the rest of nature uses, and some of the latest neuroscience points in this direction, particularly the theory of constructed emotion, which was put forth by neuroscientist Lisa Feldman Barrett.

Let’s look at some of the physiology and neurology that’s presented in this theory, shall we?

Sensing

Like computers, humans convert physical energy into electrical impulses. Our bodies are equipped with specialized neurons that help us collect all sorts of information about the world around us and about our own bodies. There are several different categories of senses:

- Exteroception - external stimuli, such as sight, hearing, taste, touch, smell, and temperature

- Proprioception - body movement and position

- Interoception - internal stimuli, such as heart rate, hunger, thirst, and respiration

The amount of data our body collects is significant. One study from 2006 found “that the human retina can transmit data at roughly 10 million bits per second. By comparison, an Ethernet can transmit information between computers at speeds of 10 to 100 million bits per second.” (Ethernet connections are much faster today.) There are also specialized cells within your eye that send messages at different rates, similar to how there are different internet protocols. Some are designed to send information fast and some are slower because they’re designed for fidelity.

Perceiving

Once we have sensory input, we use perception to select, organize, interpret, and cognitively experience the information we’ve absorbed. Our brains don’t work in the same way as the von Neumann architecture. Components are not singularly responsible for one aspect of the system. Thinking of the brain as a piece of hardware that can be reduced to its components doesn’t work. Our brain is an interconnected system that operates as a single unit.

The brain has one goal, according to Lisa Feldman Barrett — to efficiently regulate our energy by making predictions about how we should behave. We rely on past experiences and sensory information, such as interoception patterns, to make those predictions. The assessment of whether or not our behavior was effective gets incorporated into our experience set to be used the next time we encounter a similar situation. This system structure is referred to as a closed loop in computing and other fields.

We used to think that our bodies simply responded to stimuli, but what our perception is actually doing is 1) anticipating needs and 2) preparing the body to meet those needs before they arise. Most of this happens outside of our consciousness through a process called allostasis.
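
The closed loop described above can be sketched in a few lines of Python. This is a toy under loud assumptions: the class name `PredictiveLoop`, the `learning_rate` parameter, and the heart-rate numbers are all invented for illustration, and nothing here is meant to represent how Feldman Barrett models the brain. It only shows the shape of the loop: predict first, compare with what happened, and fold the difference back in for next time.

```python
class PredictiveLoop:
    """Toy closed loop: predict, observe, and fold the error back in."""

    def __init__(self, initial_estimate: float, learning_rate: float = 0.5):
        self.estimate = initial_estimate    # what past experience suggests
        self.learning_rate = learning_rate  # how strongly to revise (assumed)

    def predict(self) -> float:
        # The prediction is made before the new observation arrives.
        return self.estimate

    def update(self, observed: float) -> float:
        # Compare prediction with outcome and revise the estimate.
        error = observed - self.estimate
        self.estimate += self.learning_rate * error
        return error


loop = PredictiveLoop(initial_estimate=60.0)
errors = []
for observed_heart_rate in [80.0, 80.0, 80.0, 80.0]:  # made-up readings
    loop.predict()
    errors.append(loop.update(observed_heart_rate))

print(errors)         # the prediction error shrinks on every pass
print(loop.estimate)  # the estimate has moved toward what was observed
```

Each pass through the loop leaves the system slightly better prepared for the next similar situation, which is the property the closed-loop label is pointing at.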

Our brain has some amazing strategies, which Feldman Barrett describes in her books, that it uses to achieve this goal. Here are just a few:

- Compression — The way our brain sifts through and manages the onslaught of sensory information it’s working with is similar to how we use information hiding and abstractions while we code. We reduce redundancy by filtering out some details and creating a kind of summary of the information that is relevant. This process can keep going, resulting in lots of different layers of synthesis.

- Concepts — We are able to quickly and dynamically categorize, label, and assign meaning to these abstract patterns. Our brains construct concepts that are remarkably similar to a specific type of object in OOP called a class. A class is like a blueprint. It has a name and a list of attributes. You can build a whole neighborhood of houses from a single blueprint. Each time you build a house, you instantiate the class. In many OOP languages, classes (blueprints) and instances of classes (houses) are both objects. In our brains, this categorization happens in the moment, or at run time, in computer terms. Concept labels are created on the fly from what we infer from our abstractions. Driving through a subdivision, you don’t have to overthink what to call the dwellings you see: they’re houses. It looks like a house, the context makes sense for it being a house, so you infer that you’re looking at a house. For the programmers out there, this process is similar to how we might use “duck typing.”

- Affect — Our bodies are continually monitoring our internal state through interoception, and this state of feeling that we experience is referred to as affect, particularly in psychology. Our brains identify patterns of physical sensations, such as a racing heart, a flushed face, or the feeling of butterflies in our stomachs. These sensations are mapped, often outside of consciousness, based on intensity (idle to activated) and valence (pleasant to unpleasant) to form patterns. The term “affective empathy” is often used to describe how people use interoception patterns as a means of understanding what another person might be feeling.

- Emotion - We use compression to extrapolate patterns of affect, combined with abstractions of past experiences, into a concept which we call an emotion. For example, I have an emotional blueprint I call “nervous.” I’ve put together a pattern of attributes for it based on interoception: my heart races, my lips get dry, and my body movement becomes twitchy. I also include relevant data from past experiences: “nervous” has shown up for me more frequently in small group settings than in large groups. You might have relevant attributes that are different from mine. For example, my palms don’t sweat and yours might. For you, “nervous” might be associated with large groups more often than small ones. The supporting evidence for the theory of constructed emotion points to a conclusion that many people find disconcerting and different from how we’ve been taught that emotions work: there is no universal “fingerprint” of an emotion. We each experience and express emotions differently. There is also compelling evidence that the idea of universal facial expressions (fear, disgust, anger, etc.) isn’t accurate either. Feldman Barrett goes into this research thoroughly in her book How Emotions Are Made.

- Emotional Instances — When I am in a context that matches the pattern of attributes I’ve labeled “nervous” (say, I’m facilitating a workshop with eight participants), I’m experiencing an instance of that emotional category. My brain will predict how best to react given the context and respond in anticipation through allostasis. For me, this means I’ll start talking more, my speech will become more rapid, and I’ll smile broadly. These behavioral strategies have worked for me in the past when I’ve been nervous, so they get called upon again. For you, the anticipated behavior your brain calls upon might be to talk less instead of talking more, like I do. This is similar to how OOP languages use methods, or procedures that determine the behavior of a class. Your behavior can dynamically change in the moment, too. For example, if I notice that I’m talking too fast, I can override the behavior that was originally called upon and choose to talk more slowly instead. This is similar to how some OOP languages allow “monkey patching.”

- Simulation — Even without incoming sensory input, your brain automatically creates what is basically a hallucination to help it make sense of what’s happening in the world and assign meaning to concepts. All mental activity is based in simulation, even exteroceptive sensing. What we see, hear, touch, taste, and smell are simulations of the world, not reactions to it. For example, I think a skunk’s odor smells kind of sweet. Having grown up in a rural area, I have fond memories of long drives in the country. This positive association alters how I interpret a skunk’s olfactory pattern. My simulation of the smell is much different from that of many other people, who react with disgust. There are also unique ways in which people build simulations. For example, I have a sensory wiring known as aphantasia. I don’t see images during simulation, but I do have an internal narrator that is incredibly chatty. My aunt is the opposite. Her visual simulation is so vivid she can’t tell the difference between reading a book and watching a movie, but she has no inner monologue. Neither of these ways of simulating is better, just different. From a software point of view, simulation is kind of like a built-in testing environment with a sophisticated continuous deployment pipeline.
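
Several of the programming terms in the list above (class, instance, method, duck typing, monkey patching) can be shown together in one short Python sketch. Everything named here is hypothetical and chosen only to mirror the examples in the text: the `Nervous` class and its attributes, the `House` and `Cabin` classes, and the helper functions. Treat it as an illustration of the metaphor, not as a model of how emotions are actually constructed.

```python
from dataclasses import dataclass


@dataclass
class Nervous:
    """A concept as a class: a named blueprint with a list of attributes."""
    context: str
    heart_racing: bool = True
    dry_lips: bool = True
    sweaty_palms: bool = False  # the author's pattern; yours might be True

    def respond(self) -> str:
        # A method: behavior that has worked before for this concept.
        return "talk more and faster"


class House:
    def shelter(self) -> str:
        return "keeps the rain out"


class Cabin:  # deliberately not related to House by inheritance
    def shelter(self) -> str:
        return "keeps the rain out"


def looks_like_a_house(thing) -> bool:
    # Duck typing: judge by what the thing can do, not its declared type.
    return callable(getattr(thing, "shelter", None))


# Instantiating the class: one emotional instance in one context.
workshop = Nervous(context="workshop with eight participants")
before = workshop.respond()


def respond_slowly(self) -> str:
    return "talk more slowly"


# Monkey patching: swap the behavior at run time, after noticing a problem.
Nervous.respond = respond_slowly
after = workshop.respond()

print(before)
print(after)
print(looks_like_a_house(Cabin()), looks_like_a_house(workshop))
```

Note that the patch changes the behavior of the instance that already exists, which loosely parallels noticing mid-workshop that you are talking too fast and choosing a different response.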

What all of this means is that we have more control over our emotions than we’d previously thought. We can fix bugs in our code and we can fix bugs in our brains. To me, that’s empowering. Understanding this means that I can work to synchronize my mental concepts with the people around me. It’s similar to how I might use a UML class diagram or domain model to align with my team on how we’ll name objects in a codebase.

Does a skunk smell sweet or stinky to you? Why? What experiences and feelings have created that experience for you? And how can I change my mental concepts to better understand your point of view? To me, that’s empathy.

Before I sign off, there are a couple of caveats I’d like to share. First, while I decided to use object-oriented programming as the anchor to the metaphor, I’m not trying to play into the OOP vs. functional programming debate. Both are valid and useful forms of programming. One is not better than the other and context is an important part of choosing which language ecosystem is best for your project.

Second, I didn’t mention machine learning here. That’s not because I overlooked it but because it’s a deep topic that requires a lot of nuance to discuss. While there are many paradigms of machine learning that are based on the human brain, such as deep learning and neural networks, there is some debate as to how close those models are to the way the brain actually works. I’m personally still digging into the research to try to understand it better so if this is your area of expertise, I’d love to hear from you in the comments. (Of course, I’d love to hear from everyone in the comments. :)

Feldman Barrett does describe how the brain uses a closed-loop construct, where learning is incorporated back into the dataset that we use to make decisions, and how we use statistical learning, but she is very clear that this is not the only way we acquire understanding about the world. However, since this topic could take up a whole article in and of itself, I decided to set machine learning aside for another day.

Also, a HUGE shout out to M. Scott Ford, who collaborated with me closely on this article. It’s a joy to find someone in your life who will geek out with you on the most obscure topics and push you to make your work stronger and better. I used to have the emotion of “irritated” when Scott would present critical objections to my ideas, but over the years I’ve remapped this pattern to “grateful frustration.” (Although, I’m sure there’s a German word that more succinctly captures this sentiment.) In any case, thanks Scott. You’re the best.

What are your thoughts? Does describing human perception in terms of OOP help you understand what’s going on in your brain a little bit better? Are there new things that you learned? Let’s keep the conversation going in the comments.

Update: September 16, 2021 — I meant to mention alexithymia in this article, which is a trait that makes it difficult to ascribe words to different feeling states. Like with software, naming can be really, really hard.
